by Jacob Beasley, Director of DevOps

Modern organizations often generate large amounts of data from a wide variety of daily business activities, such as financial transactions, medical records, and student records. How can your company process and aggregate this data on a regular basis into a central place for reporting, such as a data warehouse? Batch jobs are one common solution. Batch jobs are scheduled programs that run repetitive tasks with minimal user interaction. They help businesses improve efficiency by prioritizing time-sensitive jobs and scheduling batch jobs to run at a specific time, such as “after-hours,” for faster performance when shared resources are most available.

Data is crucial for businesses. Utilizing data and turning it into something useful can give much needed insight for business performance and help business leadership to make better decisions. Data collected from business operations can come from one or more sources.

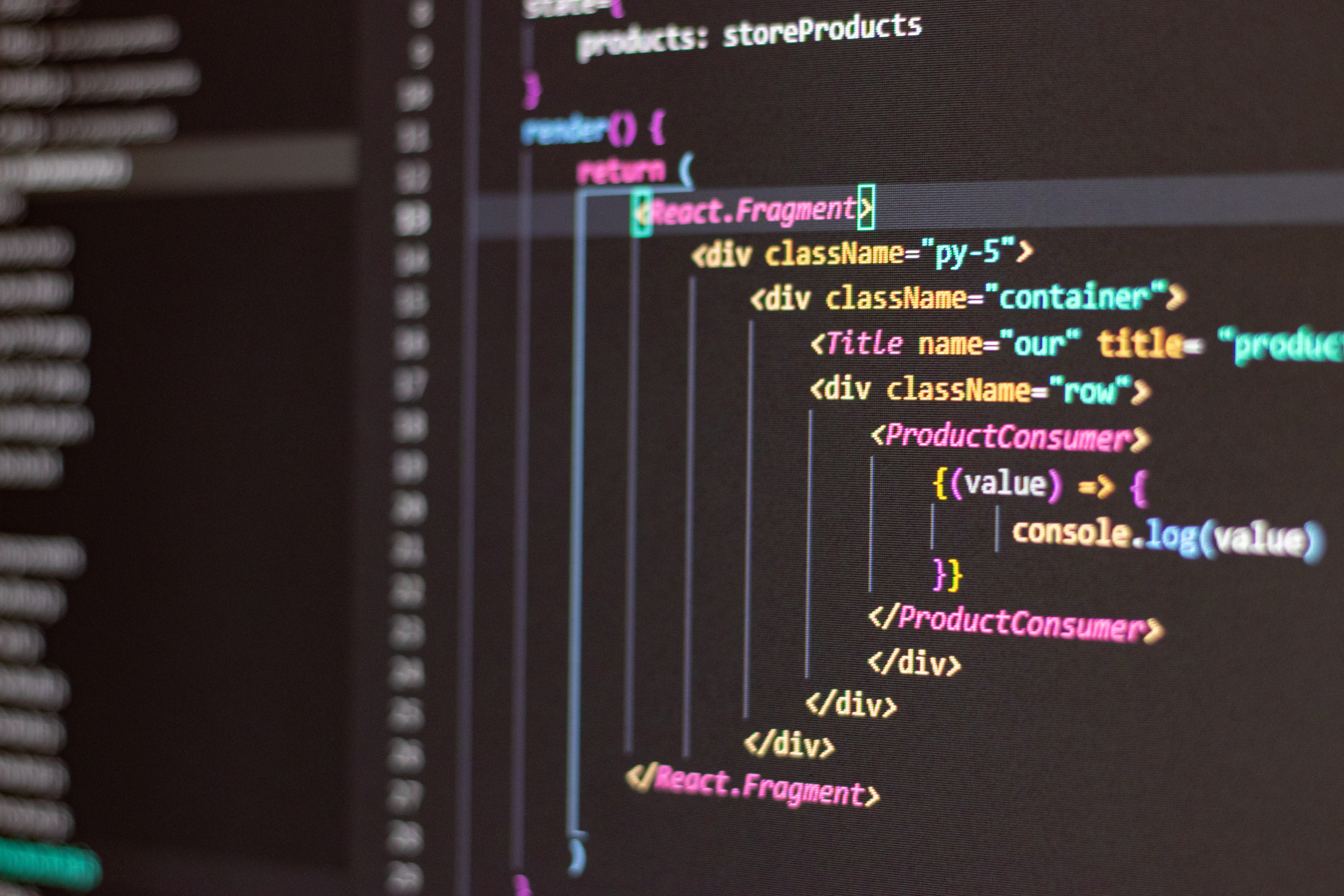

ETL (Extract/Transform/Load) software packages offer a reliable “off-the-shelf” tool with a friendly user interface to join data sources together in a unified format. By combining the advantages of ETL and batch jobs, businesses can streamline their data integration and processing efforts.

We chose Talend, a common ETL tool that compiles jobs into a Java program that can be built and run on a server or, in our case, on Kubernetes. We chose the Kubernetes cron scheduler as our scheduling engine because we had already deployed the rest of this customer’s software on Kubernetes and because it worked the way we wanted: it let us run the jobs on existing servers (no new hardware costs), was free (no new software costs), and has alerting and monitoring available through Prometheus Alertmanager.

Challenges

Writing Software

You can certainly write your own ETL software for data integration but writing software can be time consuming and costly. Fortunately, many ETL products are already available on the market to serve such purposes. Some products that come with extensive features and support may have a hefty price tag, but there are also free products available to cater to different needs, such as Talend or Apache Camel. We chose to use the free version of Talend.

Packaging

We want to package our ETL/batch job (the code and all its dependencies, such as the Java VM and various Java libraries) as a container so that it runs the same way on our servers as it does when we test it on our local machine.

Configuration Management

We need the job to run on a regular schedule. In addition, we need to encrypt our database passwords and keep them out of logs, the code repository, the Docker registry, and compiled artifacts.

Solution

Talend

Talend was founded in 2005 by two entrepreneurs, Fabrice Bonan and Bertrand Diard, to modernize data integration.

There are many ETL tools available. Talend is a data integration tool with a relatively easy-to-use interface that allows for quick builds and local testing. Talend can manage a large number of data sources, so you can easily pull data from one database and load it into another. For data transformation and processing, you can manipulate your data in a custom workflow between your primary input and primary output. Talend comes with components for aggregating, filtering, and sorting. It also comes with more advanced components for merging or splitting input and output, pinging a server, and implementing custom rules and code in Java.

Talend has several different flavors, each with different features. We chose the free version, which does not have a built-in scheduler/runner application. Other editions come with more features, including the Talend Administration Center, which provides GUI tools for managing components, scheduling jobs, and monitoring them. Talend Open Studio, which is what we used, is a free, open source version and a very powerful tool in itself, especially when combined with Docker and Kubernetes to schedule the jobs.

Packaging with Docker

There are many packaging tools for developers to choose from, such as Docker, Vagrant, and LXC (Linux Containers). We chose Docker because it enables developers to “easily pack, ship, and run any application as a lightweight, portable, self-sufficient container, which can run virtually anywhere.” It is open source and widely used.

Security Concerns

Sensitive information like passwords, SSH private keys, or SSL certificates should not be transmitted unencrypted over a network. We will not store login credentials in a Dockerfile, in the application’s source code, or in the code repository. In this regard, Talend is not the most helpful tool because, by default, it exports contexts (where you typically configure passwords) in the compiled Java archives.

We cannot allow these files to be included in our container for security reasons, so we create a .dockerignore file that tells Docker to ignore all of the job’s context files.

Example Dockerfile

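    FROM alpine

    # INSTALL DEPENDENCIES
    RUN apk add --no-cache openjdk8-jre unzip

    # COPY IN OUR CODE
    WORKDIR /app
    COPY sample_job /app/sample_job
    # Remove the default context if it was copied. The file would still exist in
    # the COPY layer above, so it should also be excluded with .dockerignore.
    RUN rm -f sample_job/contexts/Default.properties

    # RUN THIS TO START APP
    ENTRYPOINT ["/app/sample_job/sample_job_run.sh"]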

Kubernetes CronJob

The term “cron” comes from the Linux cron utility, which runs regularly occurring tasks listed in a “crontab” (cron table) file. As on Linux, Kubernetes CronJobs are a way to schedule programs to run at regular intervals, which makes them useful for periodic and recurring tasks. Unlike Linux cron, Kubernetes starts the job on whatever server is available and provides tools for monitoring, retrying, and limiting the concurrency of jobs.

Kubernetes is a portable, extensible, open-source platform for managing containerized workloads and services, that facilitates both declarative configuration and automation. It has a large, rapidly growing ecosystem. Kubernetes services, support, and tools are widely available.

Although the Unix cron utility is similar to a Kubernetes CronJob, we chose Kubernetes over crontab on Linux for a few reasons:

- Crontab on Linux would mean having a dedicated server for cron jobs, which is not ideal because:

  - Single point of failure – if this server goes down, your jobs stop.

  - No monitoring – crontab doesn’t email/alert if a job fails.

  - Complicated to configure – user permissions and ENV vars for crontab can be awkward and confusing.

  - Less scalable – if a bunch of cron jobs happen at the same time on the same machine, they can easily overload the machine. Kubernetes spreads the load across multiple servers.

  - Retries and concurrency not addressed – Kubernetes can prevent long-running cron jobs from running multiple times as well as automatically retry failed jobs.

First, we build our ETL/batch job in Talend Open Studio by connecting a few database components together, and then export the job to a file. Next, we write a Dockerfile with all the commands needed to assemble a Docker image. Then we push the image to a container registry, such as Docker Registry, for storage (in this case we use a private registry). Finally, we use a Kubernetes CronJob to run the ETL/batch job on a recurring schedule.

Although Talend’s paid solution comes with many features and support, including task scheduling, its price is hard to justify for tasks we can complete with the free Talend Open Studio and our existing Kubernetes service. Because we only needed a few batch jobs, we did not feel it was appropriate to license the paid edition.
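As a rough illustration of the final step, a CronJob manifest might look something like the sketch below. This is not the manifest used in this project: the job name, schedule, image path, and pull-secret name are all hypothetical placeholders, and the retry and concurrency settings are just one reasonable choice.

```yaml
apiVersion: batch/v1
kind: CronJob
metadata:
  name: sample-job                 # hypothetical name
spec:
  schedule: "0 2 * * *"            # assumed "after-hours" run: every day at 02:00
  concurrencyPolicy: Forbid        # skip a run if the previous one is still going
  failedJobsHistoryLimit: 3        # keep the last few failed runs for inspection
  jobTemplate:
    spec:
      backoffLimit: 2              # retry a failed run up to two times
      template:
        spec:
          restartPolicy: Never
          imagePullSecrets:
            - name: registry-credentials   # hypothetical secret for the private registry
          containers:
            - name: sample-job
              image: registry.example.com/etl/sample-job:1.0.0   # hypothetical image
```

Here `concurrencyPolicy` and `backoffLimit` are the settings that cover the concurrency and retry behavior described in the list above.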

Kubernetes Secrets and ConfigMaps

Kubernetes provides two kinds of objects for configuring our application: ConfigMaps and Secrets. We can mount them as config files or expose them as operating system environment variables that our Talend application can read and use.

Kubernetes ConfigMap

A ConfigMap is an API object used to store non-confidential data in key-value pairs. Pods can consume ConfigMaps as environment variables, command-line arguments, or as configuration files in a volume. A ConfigMap allows you to decouple environment-specific configurations from your container images to make your applications portable.

Here is an example of a Kubernetes ConfigMap:

    apiVersion: v1
    data:
      DATABASE_HOST: "somehost.mysql.azure.com"
      DATABASE_PORT: "3306"
    kind: ConfigMap
    metadata:
      name: sample-app

Kubernetes Secrets

Kubernetes Secrets let you store and manage sensitive information such as passwords, OAuth tokens, and SSH keys. Storing confidential information in a Secret is safer and more flexible than putting it verbatim in a Pod definition or in a container image. For our Talend ETL/batch job, we set our passwords in a Kubernetes Secret and added them to the container as environment variables.

    apiVersion: v1
    # stringData accepts plain-text values; values under data must be base64-encoded
    stringData:
      DATABASE_1_PASSWORD: secret_1
      DATABASE_2_PASSWORD: secret_2
    kind: Secret
    metadata:
      name: db-secret
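To show how these two objects could reach the job as environment variables, here is a hedged sketch of the container section of a pod template (for a CronJob, the part under `spec.jobTemplate.spec.template.spec`). The container name and image are hypothetical, and the sketch assumes the Secret is named `db-secret`, since Kubernetes object names must be lowercase.

```yaml
containers:
  - name: sample-job                                   # hypothetical container name
    image: registry.example.com/etl/sample-job:1.0.0   # hypothetical image
    envFrom:
      # Expose every key of the ConfigMap (DATABASE_HOST, DATABASE_PORT)
      # as an environment variable of the same name.
      - configMapRef:
          name: sample-app
    env:
      # Pull individual passwords out of the Secret by key.
      - name: DATABASE_1_PASSWORD
        valueFrom:
          secretKeyRef:
            name: db-secret        # assumed Secret name
            key: DATABASE_1_PASSWORD
      - name: DATABASE_2_PASSWORD
        valueFrom:
          secretKeyRef:
            name: db-secret
            key: DATABASE_2_PASSWORD
```

Listing the Secret keys one at a time is more verbose than `envFrom` with a `secretRef`, but it makes it explicit which passwords the job can see.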

Configuring Talend to Use Environment Variables

We need credentials to access our databases, and we need a way to store and read them securely without exposing them. In Talend, a job can be run with different Contexts, which may or may not contain secrets, or it can read its settings from environment variables. Here, we use environment variables because the secrets then only live in the process executed by the job. Once the process is done, they are no longer there.

We use System Environment Variables to configure connection parameters when deployed, and contexts when testing locally. That works out to the following code snippet:

    System.getenv().getOrDefault("POSTGRES_PASSWORD", context.postgres1_Password)

    System.getenv().getOrDefault("AZURE_PASSWORD", context.azure_connection_userPassword_password)

When a Talend job is built, it exports a file that includes all of its Contexts. We can exclude any context that contains secrets by listing it in .dockerignore, so that the Docker build leaves that context out of the image. Similarly, we can use .gitignore to keep specific files from being pushed to the remote repository. That way, even if the Docker image or the remote repository is compromised, the secrets are simply not present.

Conclusion

Working with a growing volume and variety of data can be challenging for businesses, and we need a way to properly process such valuable information. With Talend Open Studio and Kubernetes, data integration can be efficient and affordable, enabling you to aggregate and report on data from across your business as well as integrate applications by synchronizing data between their databases.

Here are some references for you to get started:

Talend Open Studio for Data Integration User Guide
